“I was recently at a demonstration of walking robots, and you know what? The organizers had spent a good deal of time preparing everything the day before. However, in the evening the cleaners had come to polish the floor. When the robots started, during the real demonstration the next morning, they had a lot of difficulty getting up. Amazingly, after half a minute or so, they walked perfectly on the polished surface. The robots could really think and reason, I am absolutely sure of it!”

Somehow the conversation had ended up here. I stared with a blank look at the lady who was trying to convince me of the robots’ self-awareness. I was trying to figure out how to tell her that she was a little ‘off’ in her assessment.

As science-fiction writer Arthur C. Clarke said: any sufficiently advanced technology is indistinguishable from magic. However, conveying this to someone with little knowledge of ‘the magic’, other than that gleaned from popular fiction, is hard. Despite trying several angles, I was unable to convince this lady that what she had seen the robots do had nothing to do with actual consciousness.

Machine learning is all the rage these days: demand for data scientists has risen to levels similar to the demand for software engineers in the late nineties. Jobs in this field are among the best paying relative to the number of years of working experience. Is machine learning magic, mundane or perhaps somewhere in between? Let’s take a look.

A Thinking Machine

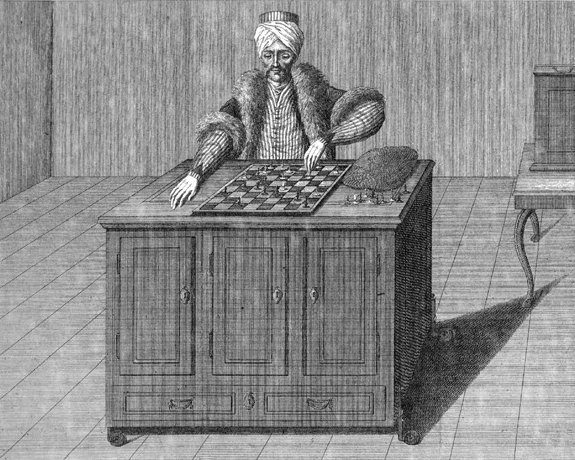

Austria, 1770. The court of Maria Theresa buzzes. The chandeliers mounted on the ceiling of the throne room cast long shadows on the carpeted floor. Flocks of nobles arrive in anticipation of the demonstration about to take place. After a long wait, a large cabinet is wheeled in, overshadowed by something attached to it. It looks like a human-sized doll. Its arms lean over the top of the cabinet. In between those puppet arms is a chess board.

The cabinet is accompanied by its maker, Wolfgang von Kempelen. He opens the cabinet doors, revealing cogs, levers and other machinery. After a dramatic pause he reveals the true name of this device: the Turk. He explains it is an automated chess player and invites Empress Maria Theresa to play. The crowd sneers and giggles. However, scorn turns to fascination as the mechanical hand of the Turk answers Maria Theresa’s opening move with a clever countermove.

To anyone in the audience the Turk looked like an actual thinking machine. It would move its arm just like people do, it took time between moves to think just like people do, it even corrected invalid moves of the opponent by reverting the faulty moves, just like people do. Was the Turk, so far ahead of its time, really the first thinking machine?

Unfortunately: no, the Turk was a hoax, an elaborate illusion. Hidden inside the cabinet was a real person: a skilled chess player who controlled the arms of the doll-like figure. The Turk shows that people can see and understand an effect, yet fail to correctly infer its cause. The Turk’s chess skills were not due to its mechanics. Instead they were the result of clever concealment of ‘mundane’ human intellect.

The Birth of Artificial Intelligence

It would take until the mid-1950s before a group of researchers at Dartmouth started the field of artificial intelligence. They believed that if the process of learning something can be precisely described in sufficiently small steps, a machine should be able to execute these steps as well as a human can. Building on existing ideas that emerged in the preceding years, the group set out to lay some of the groundwork for breakthroughs to come in the ensuing two decades. Those breakthroughs did indeed come in the form of path finding, natural language understanding and even mechanical robots.

Around the same time, Arthur Samuel of IBM wrote a computer program that could play checkers. A computer-based checkers opponent had been developed before. However, Samuel’s program could do something unique: adapt based on what it had seen before. Roughly, he did this by making the program store the moves that had led to wins in past games, and then replay those moves in appropriate situations in the current game. Samuel referred to this self-adapting process as machine learning.

What then really is the difference between artificial intelligence and machine learning? Machine learning is best thought of as a more practically oriented subfield of artificial intelligence. With mathematics at its core, it could be viewed as a form of automated applied statistics. The essence of machine learning is finding patterns in data and exploiting those to automate specific tasks: tasks like finding people in a picture, recognizing your voice or predicting the weather in your neighbourhood.

In contrast, at the core of artificial intelligence is the question of how to make entities that can perceive the environment, plan and take action in that environment to reach goals, and learn from the relation between actions and outcomes. These entities need not be physical or sentient, though that is often the way they are portrayed in (science) fiction. Artificial intelligence intersects with other fields like psychology and philosophy, as discussed next.

Philosophical Intermezzo: Turing and the Chinese room

Say a machine can convince a real person that it, the machine, is a human being. By this very act of persuasion, you could say the machine is at least as cognitively able as that human. This famous claim was made by mathematician Alan Turing in the early fifties.

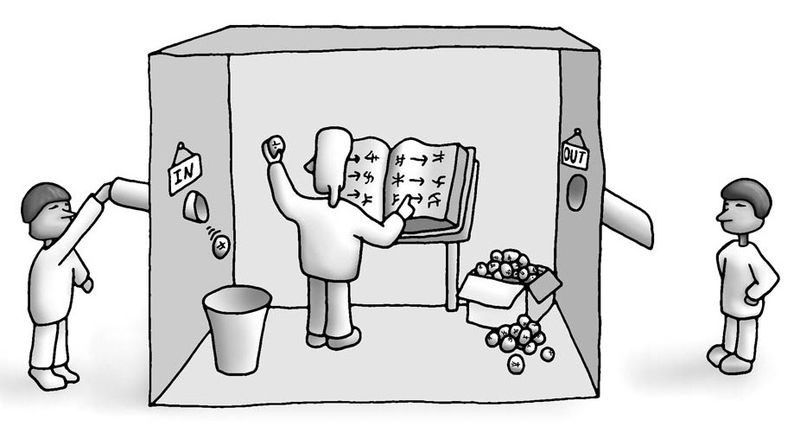

Turing’s claim just did not sit well with philosopher John Searle. He proposed the following thought experiment: imagine Robert, an average Joe who speaks only English. Let’s put Robert in a lighted room with a book and two small openings for a couple of hours. The first opening is for people to put in slips of paper with questions in Chinese: the input. The second opening is to deposit the answers to these questions written on new slips, also in Chinese: the output.

Robert does not know Chinese at all. To help him he has a huge book in this ‘Chinese’ room. In this book he can look up what symbols he needs to write on the output slips, given the symbols he sees on the input slips. Searle argued that no matter how many slips Robert processes, he will never truly understand Chinese. After all, he is only translating input questions to output answers without understanding the meaning of either. The book he uses also does not ‘understand’ anything, as it contains just a set of rules for Robert to follow. So, this Chinese room as a whole can answer questions. However, none of its components actually understands or can reason about Chinese!

Replace Robert in the story with a computer, and you get a feeling for what Searle tries to point out. Consider that while a translation algorithm may be able to translate one language to the other, being able to do that is insufficient for really understanding the languages themselves.
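To make the "replace Robert with a computer" step concrete, here is a minimal sketch of the room as a lookup table. The rule-book entries and the fallback reply are made up for illustration; Searle's thought experiment does not specify any of them.

```python
# Hypothetical rule book: these entries are invented for illustration only.
RULE_BOOK = {
    "你好吗？": "我很好，谢谢。",
    "你叫什么名字？": "我叫罗伯特。",
}


def chinese_room(slip: str) -> str:
    """Look up the symbols on the input slip and copy out the matching reply.

    Nothing here models meaning: the function only matches strings.
    """
    # Assumed fallback reply for slips that are not in the book.
    return RULE_BOOK.get(slip, "请再说一遍。")


print(chinese_room("你好吗？"))
```

The program returns sensible-looking answers, yet it contains nothing but string matching, which is the gap between transforming symbols and understanding them that Searle points at.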

The root of Searle’s argument is that knowing how to transform information is not the same as actually understanding it. Taking that one step further: in contrast with Turing, Searle’s claim is that merely being able to function on the outside like a human being is not enough for actually being one.

The lady I talked with about the walking robots had a belief. Namely, that the robots were conscious based on their adaptive response to the polished floor. We could say the robots were able to ‘fool’ her into this. Her reasoning is valid under Turing’s claim: from her perspective the robots functioned like a human. However, it is invalid under Searle’s, as his claim implies ‘being fooled’ is simply not enough to prove consciousness.

As you let this sink in, let’s get back to something more practical that shows the strength of machine learning.

Getting Practical with Spam

In the early years of this century spam emails were on the rise. There was no defense against the onslaught of emails. So too thought Paul Graham, a computer scientist, whose mailbox was overflowing like everyone else’s. He approached it like an engineer: by writing a program. His program looked at the email’s text, and filtered those that met certain criteria. This is similar to making filter rules to sort emails into different folders, something you have likely set up for your own mailbox.
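A rule-based filter of this kind could look roughly like the sketch below. The three rules are hypothetical stand-ins, since the text does not list Graham's actual rules.

```python
import re

# Hypothetical hand-written rules; not Graham's actual ones.
RULES = [
    lambda text: "click here" in text.lower(),
    lambda text: text.count("!") >= 3,
    lambda text: re.search(r"\bfree\b", text, re.IGNORECASE) is not None,
]


def is_spam_by_rules(text: str) -> bool:
    """Flag an email as spam as soon as any hand-coded rule matches."""
    return any(rule(text) for rule in RULES)


print(is_spam_by_rules("Click here for a FREE offer"))     # matched by two rules
print(is_spam_by_rules("Get your fr3e gift, click h3re"))  # slips past every rule
```

The second call shows the weakness of this approach: a spammer only needs a small spelling change to slip past every rule, after which a new rule has to be written by hand.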

Graham spent half a year manually coding rules for detecting spam. He found himself in an addictive arms race with his spammers, trying to outsmart each other. One day he figured he should look at this problem in a different way: using statistics. Instead of coding manual rules, he simply labeled each email as spam or not spam. This resulted in two labeled sets of emails, one consisting of genuine mails, the other of only spam emails.

He analyzed the sets and found that spam emails contained many typical words, like ‘promotion’, ‘republic’, ‘investment’, and so forth. Graham no longer had to write rules manually. Instead he approached this with machine learning. Let’s get some intuition for how he did that.

Identifying Spam Automagically

Imagine that you want to train someone to recognize spam emails. Your first step is showing the person many examples of emails, some labeled genuine and some labeled spam. That is: for each of these emails the person is told explicitly whether it is genuine or spam. After this training phase, you put the person to work classifying new unlabeled emails. The person thus assigns each new email a label: spam or genuine. He or she does this based on the resemblance of the new email to the ones seen during the training phase.

Replace the person in the previous paragraph with a machine learning model and you have a conceptual understanding of how machine learning works. Graham took both email sets he created: one consisting of spam emails, the other of genuine ones. He then used these to train a machine learning model that looks at how often words occur in text. After training he used the model to classify new incoming mails as being either spam or genuine. The model would, for example, mark an email as spam if the word ‘promotion’ appeared in it often, a relation it ‘learned’ from the labeled emails. This approach made his hand-crafted rules obsolete.
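The idea of training on word frequencies can be sketched as follows. This is a simplified naive-Bayes-style classifier and not Graham's exact formula; the tiny email sets, the equal weighting of both classes and the add-one smoothing are all assumptions made for illustration.

```python
import math
from collections import Counter


def tokenize(text):
    """Split an email into lowercase words (a deliberately crude tokenizer)."""
    return text.lower().split()


def train(spam_emails, genuine_emails):
    """Count how often each word occurs in each labeled set."""
    spam_counts = Counter(w for e in spam_emails for w in tokenize(e))
    genuine_counts = Counter(w for e in genuine_emails for w in tokenize(e))
    return spam_counts, genuine_counts


def classify(text, model):
    """Label text by summing per-word log-odds; positive totals mean spam."""
    spam_counts, genuine_counts = model
    vocab = set(spam_counts) | set(genuine_counts)
    spam_total = sum(spam_counts.values())
    genuine_total = sum(genuine_counts.values())
    score = 0.0
    for word in tokenize(text):
        if word not in vocab:
            continue  # words never seen during training carry no evidence
        # Add-one smoothing keeps unseen word/label pairs from giving log(0).
        p_spam = (spam_counts[word] + 1) / (spam_total + len(vocab))
        p_genuine = (genuine_counts[word] + 1) / (genuine_total + len(vocab))
        score += math.log(p_spam / p_genuine)
    return "spam" if score > 0 else "genuine"


# Made-up training data, standing in for the two labeled email sets.
model = train(
    spam_emails=["promotion investment promotion offer", "investment promotion now"],
    genuine_emails=["lunch meeting tomorrow", "project meeting notes tomorrow"],
)
print(classify("special promotion offer", model))
print(classify("meeting tomorrow", model))
```

No rule about ‘promotion’ was written anywhere: the word counts in the labeled examples carry that information, so relabeling or adding emails changes the behaviour without touching the code.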

Your mailbox still works with this basic approach. By explicitly labeling spam messages as spam you are effectively participating in training a machine learning model that can recognize spam. The more examples it has, the better it will become. This type of application goes to the core of what machine learning can do: find patterns in data and bind some kind of consequence to the presence, or absence, of a pattern.

This example also reveals the difference between software engineering and data science. A software engineer builds a computer program explicitly by coding rules of the form: if I see this then do that. This is how Graham initially tried to combat spam. In contrast, a data scientist collects a large number of things to see, and a large number of corresponding things to do, and then tries to infer the rules using a machine learning method. This results in a model: essentially an automatically written computer program.

Software Engineering versus Machine Learning

If you would like a deeper conceptual understanding and don’t shy away from something a bit more abstract: let’s dive a little bit deeper into the difference between software engineering and machine learning. If you don’t: feel free to skip to the conclusion.

As a simple intuition you can think of the difference between software engineering and machine learning as the difference between writing a function explicitly and inferring a function from data implicitly. As a minimal contrived example: imagine you have to write a function f that adds two numbers. You could write it like this in an imperative language:

    function f(a, b):
        c = a + b
        return c

This is the case where you explicitly code a rule. Contrast this with the case where you no longer write f yourself. Instead you train a machine learning model that produces the function f based on many examples of inputs a and b and output c.

    train([(a, b, c), (a, b, c), (...)]) -> f

That is effectively what machine learning boils down to: training a model is like writing a function implicitly by inferring it from the data. After this, f can be used on new, previously unseen (a, b) inputs. If it has seen enough input examples, it will perform addition on the unseen (a, b) inputs. This is exactly what we want. However, consider what happens if the model is fed only one training sample: (2, 2, 4). Since $2 + 2 = 4$ and $2 \times 2 = 4$, it might yield a function that multiplies its inputs instead of adding them!
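The ambiguity of the single sample (2, 2, 4) can be made concrete with a toy sketch. Here `train` simply keeps every candidate function that reproduces all examples; the three-entry candidate set is a hypothetical, deliberately tiny stand-in for the far larger space of functions that real methods search.

```python
import operator

# Hypothetical, deliberately tiny set of candidate functions.
CANDIDATES = {
    "add": operator.add,
    "multiply": operator.mul,
    "subtract": operator.sub,
}


def train(examples, candidates=CANDIDATES):
    """Return the names of all candidates that reproduce every (a, b, c) example."""
    return [
        name
        for name, f in candidates.items()
        if all(f(a, b) == c for a, b, c in examples)
    ]


print(train([(2, 2, 4)]))             # ['add', 'multiply']
print(train([(2, 2, 4), (1, 3, 4)]))  # ['add']
```

One example leaves two functions standing; a second, well-chosen example rules out multiplication. More and more varied data narrows down which function the training step can return.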

There are roughly two types of functions that you can generate, that correspond to two types of tasks. The first one, called regression, returns some continuous value, as in the addition example above. The second one, called classification, returns a value from a limited set of options like in the spam example. The simplest set of options being: ‘true’ or ‘false’.
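For the regression case, a minimal sketch is a least-squares fit of the form c ≈ w1·a + w2·b, solved by hand through the 2×2 normal equations. The linear form without an intercept and the example data are assumptions chosen to keep the code short; the classification case returns a label instead, as in the spam example.

```python
def fit_linear(examples):
    """Least-squares fit of c ~ w1*a + w2*b (no intercept) from (a, b, c) triples."""
    s_aa = sum(a * a for a, b, c in examples)
    s_ab = sum(a * b for a, b, c in examples)
    s_bb = sum(b * b for a, b, c in examples)
    s_ac = sum(a * c for a, b, c in examples)
    s_bc = sum(b * c for a, b, c in examples)
    # Determinant of the 2x2 normal-equation matrix.
    det = s_aa * s_bb - s_ab * s_ab
    if det == 0:
        # For instance a single example: many weight pairs fit equally well.
        raise ValueError("examples do not pin down a unique function")
    # Cramer's rule for the two weights.
    w1 = (s_ac * s_bb - s_ab * s_bc) / det
    w2 = (s_aa * s_bc - s_ab * s_ac) / det
    return lambda a, b: w1 * a + w2 * b


# Made-up addition examples; no '+' rule for f is written anywhere.
f = fit_linear([(1, 2, 3), (3, 1, 4), (2, 5, 7), (4, 4, 8)])
print(f(10, 5))
```

With these four examples the fitted weights both come out as 1, so f behaves like addition on inputs it has never seen. Feeding it only (2, 2, 4) raises the error instead, because a single example does not determine the weights.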

Effectively we are giving up precise control over the function’s exact definition. Instead we move to the level of specifying what should come out given what we put in. What we gain from this is the ability to infer much more complex functions than we could reasonably write ourselves. This is what makes machine learning a powerful approach for many problems. Nevertheless, every convenience comes at a cost and machine learning is no exception.

Limitations

The main challenge for using a machine learning approach is that large amounts of data are required to train models, which can be costly to obtain. Luckily, recent years have seen an explosion in available data. More people are producing content than ever, and wearable electronics yield vast streams of data. All this available data makes it easier to train useful models, at least for some cases.

A second caveat is that training models on large amounts of data requires a lot of processing power. Fortunately, rapid advances in graphics hardware have led to orders of magnitude faster training of complex models. Additionally, these hardware resources can be easily accessed through cloud services, making things much easier.

A third downside is that, depending on the machine learning method, what happens inside of the model created can become opaque. What does the generated function do to get from input to output? It is important to understand the resulting model, to ensure it performs sanely on a wide range of inputs. Tweaking the model is more an art than a science, and the risk of harmful hidden biases in models is certainly realistic.

Applying machine learning is no replacement for software engineering, but rather an augmentation for specific challenges. Many problems are far more cost-effective to approach with a simple set of explicitly written rules than by endlessly tinkering with a machine learning model. Machine learning practitioners are best off knowing not only the mechanics of each specific method, but also whether machine learning is appropriate to use at all.

Conclusion

Many modern inventions would look like magic to people from one or two centuries ago. Yet, knowing how these really work shatters this illusion. A huge increase in the use of machine learning to solve a wide range of problems has taken place in the last decade. People unfamiliar with the underlying techniques often both under- and overestimate the potential and the limitations of machine learning methods. This leads to unrealistic expectations, fears and confusion. From apocalyptic prophecies to shining utopias: machine learning myths abound where we are better served staying grounded in reality.

This is not helped by naming. Many people associate the term ‘learning’ with the way humans learn, and ‘intelligence’ with the way people think. Though the analogy is sometimes conceptually useful, human learning and thinking are quite different from what these methods currently do. A better name would have been data-based function generation. However, that admittedly sounds much less sexy.

Nevertheless, machine learning at its core is not much more than generating functions based on input and, usually, output data. With this approach it can deliver fantastic results on narrowly defined problems. This makes it an important and evolving tool, but like a hammer for a carpenter: it is really just a tool. A tool that augments, rather than replaces, software engineering. Just as a hammer is limited by the laws of physics, machine learning is fundamentally limited by the laws of mathematics. It is no magic black box, nor can it solve all problems. However, it does offer a way forward for creating solutions to specific real-world challenges that were previously elusive. In the end it is neither magic nor mundane, but is grounded in ways that you now have a better understanding of.

Resources

- Singh, S. (2002). Turk’s Gambit.

- Russell, S. & Norvig, P. (2003). Artificial Intelligence.

- Halevy, A. & Norvig, P. & Pereira, F. (2010). The Unreasonable Effectiveness of Data.

- Lewis, T. G. & Denning, P. J. (2018). Learning Machine Learning, CACM 61:12.

- Graham, P. (2002). A Plan for Spam.

- O’Neil, C. (2016). Weapons of Math Destruction.

- Stack Overflow Developer Survey (2019).